By Varada Iyengar, VP of Digital Product Engineering, and Ryo Hang, Senior Solution Architect

Executive Summary

Today, many enterprises are migrating all or part of their critical workloads and assets to the cloud as part of their Digital Transformation journey. The primary focus of the initial Cloud Enablement phase has been migrating and re-platforming core infrastructure and platforms.

Our clients are now embarking on the next phase of this cloud journey by extending their cloud investments to their application portfolios. Building cloud-native applications and further simplifying and abstracting infrastructure layers are becoming imperative to improving speed of delivery, performance, availability, and quality of service, all critical performance indicators for high-performing engineering organizations. With increased focus on infrastructure abstraction, serverless computing has become central to implementing and deploying business logic.

Below, we discuss implementation details of serverless frameworks in the Amazon Web Services (AWS) cloud context. We address when you should leverage serverless architecture, which domains are typically not suited for serverless implementations, and typical use cases and examples for both.

We conclude by highlighting risks, challenges, and issues you must prepare to mitigate as you embark on implementing serverless architecture patterns.

Serverless Architecture in AWS

Serverless architecture is a way to build and run applications and services without having to provision and manage infrastructure. Applications still run on underlying servers, but the model removes substantial operational overhead from development teams. Since AWS Lambda launched in 2014, it has been integrated with most AWS services, making serverless architecture on AWS increasingly viable for commercial-grade enterprise applications.

Our serverless application development experience points to key benefits of serverless framework adoption:

- Operational Efficiency

Serverless architecture largely eliminates the need for an infrastructure and operations team to provision resources, manage scalability, or worry about availability and fault tolerance, allowing product organizations to focus on building and delivering applications from day one. Much of the planning, analysis, design, and build/configuration work for non-functional requirements around capacity, scalability, infrastructure-level security, disaster recovery (DR), upgrades, etc. shifts to AWS.

- Cost Efficiency

Serverless architecture is a true pay-as-you-go model: you pay only for what you use. In a serverless world, applications scale with their actual usage, so we never overprovision capacity or have to deprovision it later. There are no idle compute resources to repurpose or manage.

- Scalability / Availability

With serverless architecture, applications scale automatically, so users get consistent performance as demand changes. You don’t have to “guesstimate” peak load or worry about temporary traffic spikes and the resulting load on your systems, because AWS Lambda includes built-in load balancing and autoscaling.

- Speed of Delivery

Developers can focus on application development and spend less time on operations and IT infrastructure. Serverless frameworks provide more agility in application development, and AWS offers tools such as CloudFormation, the Serverless Application Model (SAM), and local containers to speed up development and deployment.

- Ease of Deployment

Serverless solutions are like “Skynet” in the Terminator movies: once the automation is in place, deployment largely runs itself. Using tools like SAM, CloudFormation, and CodePipeline / Jenkins, you can build an automated process for continuous integration, continuous delivery, and continuous deployment, with the option of keeping a “human in the loop”.
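To make the pay-as-you-go point concrete, here is a rough cost estimate for a Lambda workload. This is a minimal sketch, assuming Lambda's billing model of a per-request charge plus a per-GB-second compute charge; the default prices are assumptions (x86 list prices in us-east-1 at one point in time), and the free tier is ignored. Check the current AWS Lambda pricing page before relying on the numbers.

```python
def estimate_lambda_cost(
    invocations: int,
    memory_mb: int,
    avg_duration_ms: float,
    price_per_million_requests: float = 0.20,   # assumed list price (USD)
    price_per_gb_second: float = 0.0000166667,  # assumed list price (USD)
) -> float:
    """Return an estimated cost in USD for the given usage (free tier ignored)."""
    # Compute is billed in GB-seconds: memory (GB) x duration (s) x invocations.
    gb_seconds = invocations * (memory_mb / 1024) * (avg_duration_ms / 1000)
    compute_cost = gb_seconds * price_per_gb_second
    # Requests are billed per million invocations.
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost


if __name__ == "__main__":
    # 1M invocations at 128 MB and 100 ms average duration.
    print(f"${estimate_lambda_cost(1_000_000, 128, 100):.2f}")
    # No traffic means no compute or request charges.
    print(f"${estimate_lambda_cost(0, 128, 100):.2f}")
```

The second call illustrates the "no idle compute" point: with zero invocations, the function itself costs nothing, unlike an always-on server.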

When to Use Serverless Solutions

Front-end Development & Hosting

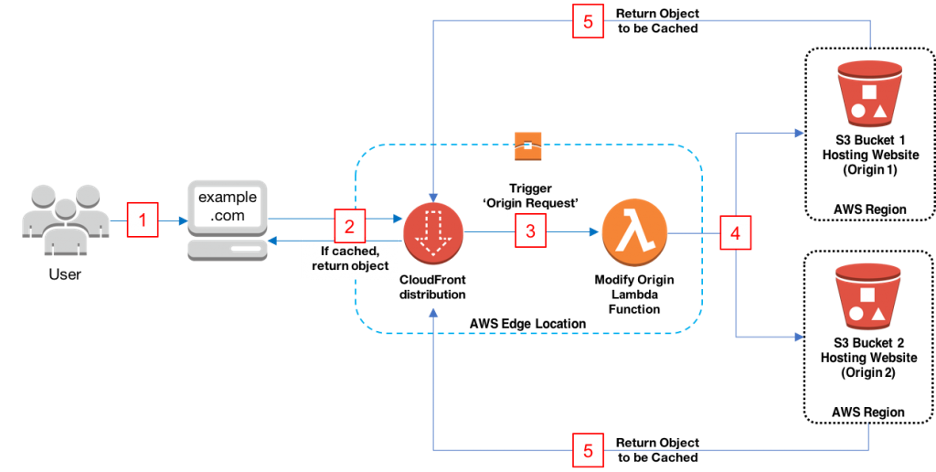

With managed AWS services like Simple Storage Service (S3) and CloudFront, the Paradigm team has helped customers move from traditional server hosting to AWS managed (serverless) services, reducing operational overhead and improving content delivery performance and scalability through CloudFront edge locations. Our customers can now deliver a front-end application to different regions with very low latency.

Additionally, we can leverage the front-end JavaScript software development kit (SDK) to speed up integration with resources (e.g., Cognito for authentication) deployed via other Infrastructure as Code tools. We can also customize headers and delivery logic through Lambda integration with CloudFront (Lambda@Edge).
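One way to customize headers and delivery logic at the edge is a Lambda@Edge function on a CloudFront origin-response trigger. This is a minimal sketch, assuming the Python runtime and CloudFront's event shape (`Records[0].cf.request` / `Records[0].cf.response`, headers keyed by lowercase name). The specific security headers and the `/static/` caching rule are hypothetical choices for illustration.

```python
# Illustrative headers; choose values that match your own security policy.
SECURITY_HEADERS = {
    "strict-transport-security": "max-age=63072000; includeSubDomains",
    "x-content-type-options": "nosniff",
    "x-frame-options": "DENY",
}

# Hypothetical rule: long-lived caching for fingerprinted static assets.
STATIC_PREFIX = "/static/"
STATIC_CACHE_CONTROL = "public, max-age=31536000, immutable"


def _set_header(headers, name, value):
    # CloudFront keys headers by lowercase name; each entry is a list of
    # {"key": <original-case name>, "value": <header value>} objects.
    display_name = "-".join(part.capitalize() for part in name.split("-"))
    headers[name] = [{"key": display_name, "value": value}]


def handler(event, context):
    cf = event["Records"][0]["cf"]
    request, response = cf["request"], cf["response"]
    headers = response["headers"]

    # Add security headers to every response.
    for name, value in SECURITY_HEADERS.items():
        _set_header(headers, name, value)

    # Delivery logic: cache static assets longer than other content.
    if request["uri"].startswith(STATIC_PREFIX):
        _set_header(headers, "cache-control", STATIC_CACHE_CONTROL)

    # Returning the modified response lets CloudFront cache and deliver it.
    return response
```

Because origin-response modifications are cached by CloudFront, the function runs on cache misses rather than on every viewer request.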

Highly Scalable API Services with Minimal Operational Support

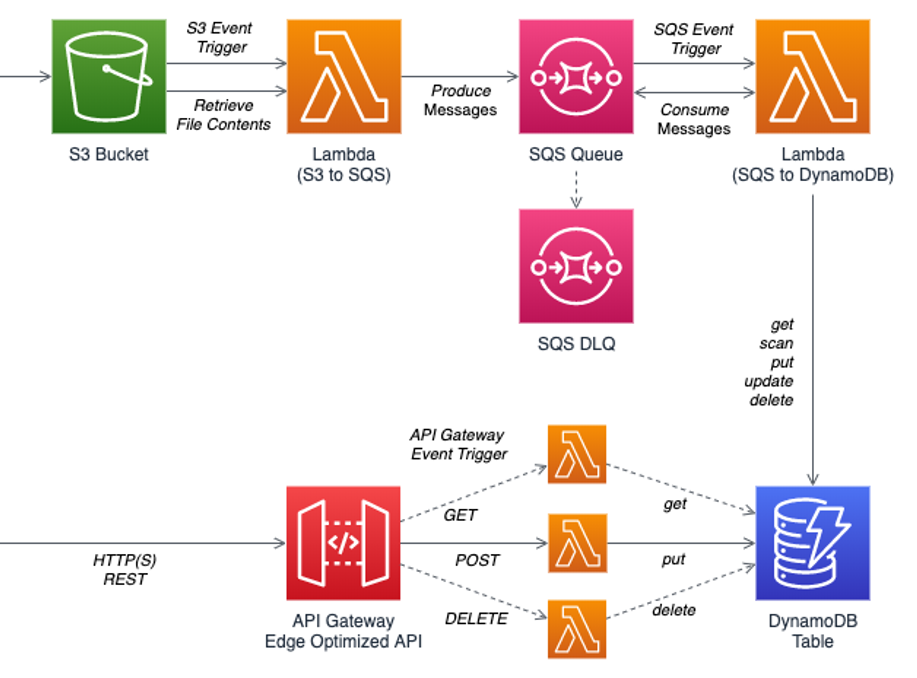

Building business application programming interfaces (APIs) is essentially the same in traditional frameworks (e.g., .NET and Spring) and serverless frameworks. However, maintaining and supporting serverless applications takes far less effort than running applications on Amazon Elastic Compute Cloud (EC2) or container clusters. Together, Route53 and API Gateway can provide a set of scalable API endpoints that can be deployed across different environments. API Gateway can define custom error responses and request model validation, then dispatch requests to the Lambda handler, which plays the same role as a controller method in a traditional application framework. We can then persist data into either DynamoDB or Aurora Serverless databases, which can asynchronously trigger a Lambda function (for DynamoDB, via DynamoDB Streams) to react to data changes for downstream processing.
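To illustrate the "Lambda handler as controller" idea, here is a minimal sketch of a handler behind an API Gateway REST API with Lambda proxy integration, assuming the proxy event fields `httpMethod`, `resource`, `pathParameters`, and `body`. The `/orders` routes, the `item` field, and the in-memory `_ORDERS` store (standing in for DynamoDB or Aurora) are hypothetical.

```python
import json

# Hypothetical in-memory stand-in for the persistence layer (DynamoDB/Aurora).
_ORDERS = {}


def _response(status, body):
    # Lambda proxy integration expects statusCode, headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def create_order(payload):
    # Controller-style business logic, independent of the HTTP transport.
    order_id = f"order-{len(_ORDERS) + 1}"
    _ORDERS[order_id] = {**payload, "id": order_id}
    return _ORDERS[order_id]


def handler(event, context):
    # Route on HTTP method and resource template, like a controller mapping.
    route = (event.get("httpMethod"), event.get("resource"))
    if route == ("POST", "/orders"):
        try:
            payload = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return _response(400, {"message": "Body must be valid JSON"})
        if "item" not in payload:
            # API Gateway model validation would normally reject this earlier.
            return _response(400, {"message": "Missing required field: item"})
        return _response(201, create_order(payload))
    if route == ("GET", "/orders/{id}"):
        order_id = (event.get("pathParameters") or {}).get("id")
        order = _ORDERS.get(order_id)
        if order is None:
            return _response(404, {"message": "Order not found"})
        return _response(200, order)
    return _response(404, {"message": "Route not found"})
```

Keeping `create_order` separate from `handler` mirrors the controller/service split of traditional frameworks and makes the business logic testable without API Gateway.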

Async Tasks – Email, SMS, Chatbot

Serverless architecture is largely event-driven: Lambda functions are decoupled from one another and can be triggered asynchronously by other Lambda functions, EventBridge events, S3 events, DynamoDB Streams, and more. For instance, an asynchronous Lambda function can send an SMS confirmation when a file changes in an S3 bucket.
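The S3-to-SMS example above can be sketched as follows. This assumes the standard S3 event notification shape (`Records[].s3.bucket.name`, URL-encoded `Records[].s3.object.key`, `eventName`). The `send_sms` callable and the phone number are hypothetical and injected so the logic can be tested locally; in AWS it could wrap an SNS client's `publish(PhoneNumber=..., Message=...)` call.

```python
from urllib.parse import unquote_plus


def build_sms_messages(event):
    """Turn an S3 event notification into human-readable confirmations."""
    messages = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (e.g., spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        action = record["eventName"]  # e.g. "ObjectCreated:Put"
        messages.append(f"{action}: s3://{bucket}/{key}")
    return messages


def make_handler(send_sms, phone_number):
    """Build a Lambda handler with an injected (hypothetical) SMS sender."""

    def handler(event, context):
        messages = build_sms_messages(event)
        for text in messages:
            send_sms(phone_number, text)
        return {"sent": len(messages)}

    return handler
```

Injecting the sender keeps the event-parsing logic independent of the notification channel, so the same function could feed email or a chatbot instead of SMS.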

Failure handling is critical in a distributed asynchronous system. For asynchronous Lambda invocations, we configure a dead-letter queue (DLQ), which allows us to:

- Configure an alarm for any messages delivered to a DLQ;

- Examine logs for exceptions that might have caused messages to be delivered to a DLQ;

- Analyze the contents of messages delivered to a DLQ to diagnose software or the producer’s or consumer’s hardware issues; and

- Determine whether you have given your consumer sufficient time to process messages.
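Several of the tasks listed above (examining failures and analyzing DLQ contents) can be partly automated. This is a minimal sketch, assuming the DLQ is an SQS queue consumed by another Lambda function, and that each message carries Lambda's DLQ attributes `RequestID` and `ErrorMessage` with the original event as the body. The grouping and sampling logic is our own illustration.

```python
import json
from collections import Counter


def summarize_dlq(records):
    """Summarize SQS DLQ records from failed asynchronous Lambda invocations.

    Assumes each record's messageAttributes may include ErrorMessage and
    RequestID (as string values) and that the body is the original event.
    """
    errors = Counter()
    request_ids = []
    samples = {}
    for record in records:
        attrs = record.get("messageAttributes", {})
        error = attrs.get("ErrorMessage", {}).get("stringValue", "<unknown>")
        errors[error] += 1
        request_id = attrs.get("RequestID", {}).get("stringValue")
        if request_id:
            # Request IDs let you find the matching entries in CloudWatch Logs.
            request_ids.append(request_id)
        # Keep the first original payload per error type for diagnosis.
        if error not in samples:
            try:
                samples[error] = json.loads(record.get("body", ""))
            except json.JSONDecodeError:
                samples[error] = record.get("body")
    return {"errors": dict(errors), "request_ids": request_ids, "samples": samples}
```

A spike in one error type (for example, repeated timeouts) is a hint that the consumer was not given enough time to process its messages.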

Standard Authentication & Authorization

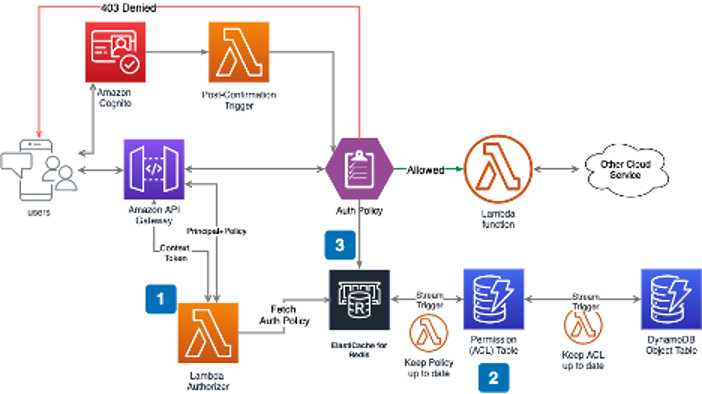

Addressing permission control is inevitable when building a complex web service such as a serverless application. Amazon Cognito is a powerful authentication and authorization service, managed by AWS, that is often combined with Amazon API Gateway and AWS Lambda to build secure serverless web services.

The Paradigm team wrote AWS Lambda functions and integrated them with Amazon API Gateway, Amazon Cognito, and Amazon DynamoDB to build a sophisticated object-level authorization model for one of our customers. We then integrated Amazon ElastiCache for Redis to help it scale.

Amazon Cognito provides a managed user directory for storing user lists. It also supports custom login and sign-up workflows, as well as identity provider (IdP) federation to integrate an organization’s existing Active Directory.

The Lambda authorizer plays a key role in allowing and denying requests to API Gateway. AWS Lambda authorizer blueprints for the most popular languages are available on GitHub, and our code repository was built on top of them. Access control lists (ACLs) can be built and maintained in either DynamoDB or Aurora databases; we selected DynamoDB in this example.

These GitHub repositories also include code samples and references.

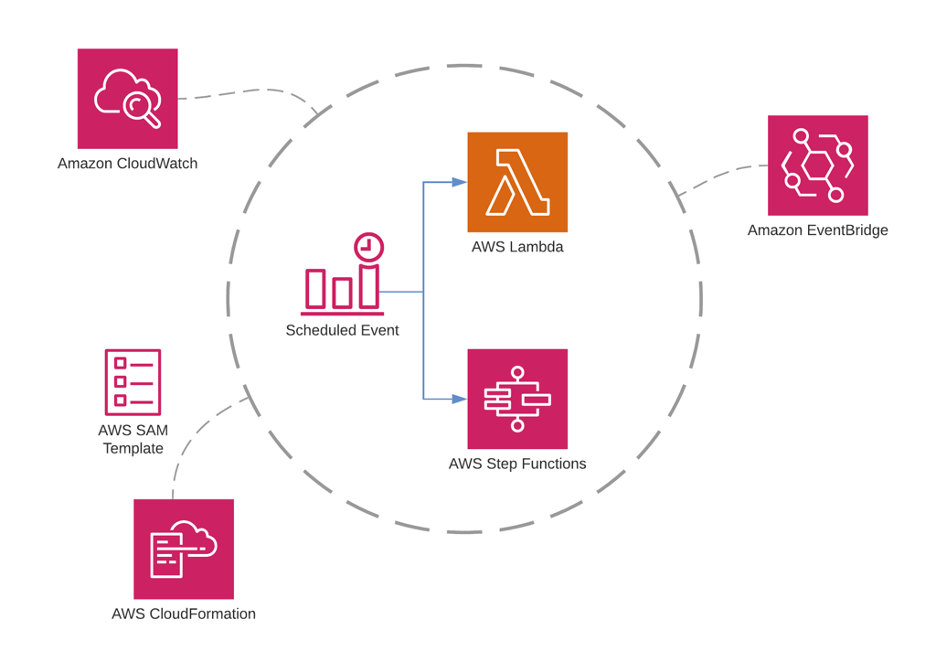
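The authorizer pattern described above can be sketched as a TOKEN-type Lambda authorizer that looks up an ACL and returns an IAM policy for `execute-api:Invoke`. This assumes API Gateway's TOKEN authorizer input (`authorizationToken`, `methodArn`). The `TOKENS` and `ACL` dictionaries are hypothetical stand-ins: a real implementation would verify the Cognito JWT and read the ACL from DynamoDB (optionally cached in ElastiCache for Redis).

```python
# Hypothetical token -> principal lookup. A production authorizer would verify
# the Cognito-issued JWT (signature, issuer, audience, expiry) instead.
TOKENS = {"Bearer demo-token": "user-123"}

# Hypothetical ACL: principal -> allowed (HTTP method, path prefix) pairs.
# A dict stands in for the DynamoDB ACL table here.
ACL = {"user-123": {("GET", "/orders"), ("POST", "/orders")}}


def _policy(principal_id, effect, resource):
    # Response shape API Gateway expects from a Lambda authorizer.
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {"Action": "execute-api:Invoke", "Effect": effect, "Resource": resource}
            ],
        },
    }


def _parse_method_arn(method_arn):
    # arn:aws:execute-api:{region}:{account}:{api-id}/{stage}/{METHOD}/{path...}
    api_part = method_arn.split(":", 5)[5]
    _api_id, _stage, method, *path_parts = api_part.split("/")
    return method, "/" + "/".join(path_parts)


def _is_allowed(principal_id, method, path):
    for allowed_method, prefix in ACL.get(principal_id, set()):
        if method == allowed_method and (path == prefix or path.startswith(prefix + "/")):
            return True
    return False


def handler(event, context):
    principal_id = TOKENS.get(event.get("authorizationToken"))
    if principal_id is None:
        # Raising "Unauthorized" makes API Gateway return 401.
        raise Exception("Unauthorized")
    method, path = _parse_method_arn(event["methodArn"])
    effect = "Allow" if _is_allowed(principal_id, method, path) else "Deny"
    return _policy(principal_id, effect, event["methodArn"])
```

An explicit `Deny` yields a 403 for authenticated users who lack permission, while unknown tokens get a 401, which keeps the two failure modes distinguishable for clients.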

Scheduled Jobs

Scheduled jobs are low-hanging fruit for migration to serverless Lambda. Organizations traditionally set up cron jobs or programmatic triggers to run scheduled jobs on containers or servers, which wastes resources when jobs are not running: CPU and memory sit idle. AWS allows Lambda and Step Functions to be integrated with CloudWatch Events rules to do the same thing without worrying about infrastructure. Because the rule schedule syntax is very similar to crontab syntax, we can move the existing jobs into Lambda or Step Functions with little change. Many AWS services, including CloudFormation, also emit internal events that can trigger CloudWatch Events and EventBridge rules.
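Although the syntaxes are similar, they are not identical: CloudWatch Events / EventBridge cron expressions add a sixth Year field, require `?` in either day-of-month or day-of-week, and number weekdays differently (names like `MON-FRI` avoid confusion). Rule schedules are also evaluated in UTC. The helper below is a minimal sketch of converting a standard 5-field crontab line; it handles plain values, comma lists, and simple day-of-week ranges only, and is our own illustration rather than an AWS tool.

```python
DAY_NAMES = ["SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT"]


def _convert_dow(field: str) -> str:
    """Map Unix day-of-week numbers (0 or 7 = Sunday) to EventBridge day names."""
    converted = []
    for part in field.split(","):
        if "-" in part:
            start, end = (int(x) for x in part.split("-"))
            if not 0 <= start <= end <= 6:
                raise ValueError(f"unsupported day-of-week range: {part}")
            converted.append(f"{DAY_NAMES[start]}-{DAY_NAMES[end]}")
        else:
            day = int(part)  # step syntax like */2 is not handled here
            if not 0 <= day <= 7:
                raise ValueError(f"invalid day of week: {part}")
            converted.append(DAY_NAMES[day % 7])
    return ",".join(converted)


def to_eventbridge_cron(crontab: str) -> str:
    """Convert 'min hour dom month dow' into a cron(...) schedule expression."""
    minute, hour, dom, month, dow = crontab.split()
    if dom != "*" and dow != "*":
        # Unix cron ORs these fields; EventBridge needs one of them to be '?'.
        raise ValueError("cannot set both day-of-month and day-of-week")
    if dow == "*":
        dow = "?"
    else:
        dom = "?"
        dow = _convert_dow(dow)
    # Minute, hour, and month pass through unchanged; '*' in Year = every year.
    return f"cron({minute} {hour} {dom} {month} {dow} *)"
```

For example, a nightly job at 02:00 (`0 2 * * *`) becomes `cron(0 2 * * ? *)`, and a weekday job at 09:30 (`30 9 * * 1-5`) becomes `cron(30 9 ? * MON-FRI *)`.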

One caveat: Lambda functions have a maximum duration (15 minutes). For a long-running job, it’s better to break it into mini-batches or assemble a workflow in Step Functions, which is a more scalable approach in the long run.

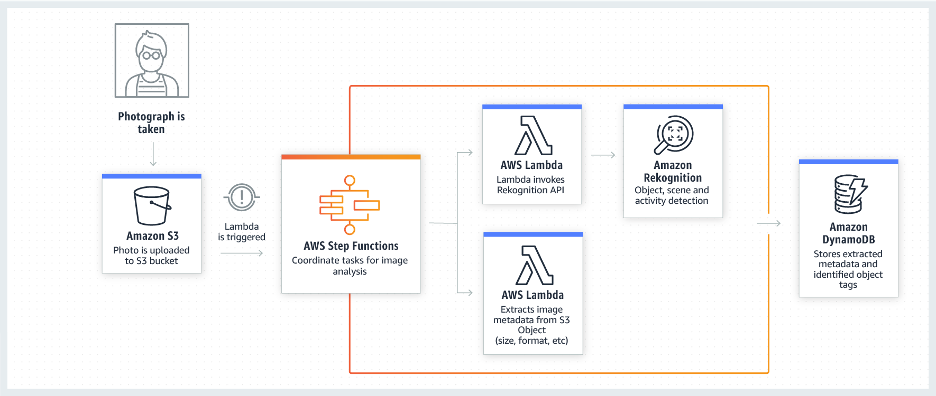
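Breaking a long-running job into mini-batches comes down to simple arithmetic: fit as many items into one invocation as the timeout allows, with some headroom. This is a minimal sketch, assuming you can estimate a per-item processing time; the 0.8 safety factor is an arbitrary illustrative choice. Each resulting batch could then be handled by a separate invocation, for example as iterations of a Step Functions Map state.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")

LAMBDA_MAX_TIMEOUT_SECONDS = 900  # 15-minute limit for a single invocation


def plan_batches(
    items: Sequence[T],
    seconds_per_item: float,
    timeout_seconds: float = LAMBDA_MAX_TIMEOUT_SECONDS,
    safety_factor: float = 0.8,  # assumption: keep 20% headroom
) -> List[List[T]]:
    """Split work into mini-batches that should each finish within one invocation."""
    budget = timeout_seconds * safety_factor
    batch_size = math.floor(budget / seconds_per_item)
    if batch_size < 1:
        # A single item exceeds the budget; it must be split or moved elsewhere.
        raise ValueError("one item does not fit in a single invocation")
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]
```

With 30 items at about 60 seconds each, the budget is 720 seconds, so each batch holds 12 items and the job runs as three invocations (12, 12, and 6 items).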

Data Processing & Media Transformation with State Machines

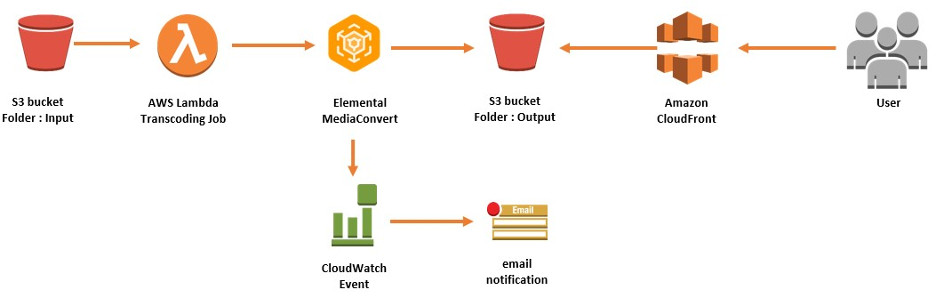

Data processing and media transformation commonly work like a pipeline, for example an on-demand or scheduled batch job with more complicated logic and error handling. Unlike web services that need up to 24×7 availability, these workloads can usually run at a lower priority. AWS provides several managed (serverless) services to process data (e.g., EMR, AWS Glue, Athena) and media (e.g., Elemental Live, MediaConnect, MediaLive, MediaPackage).

In some situations, we have to combine services into a workflow to solve a suite of problems, which can get quite complicated. We orchestrate these tools together in Step Functions.

We declare our state machine through CloudFormation, covering every subsequent step and state and every expected or unexpected result, and attach native actions (like Wait or Choice) or tasks such as Lambda, AWS Glue, or AWS Elemental MediaConvert. We can then watch the state machine run live and visualize each execution (with logs) in the AWS console. At each step, we can define retry and failure handling.

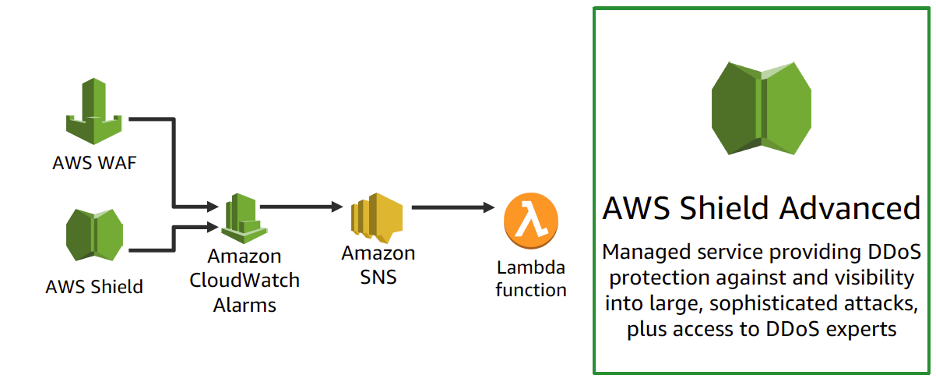

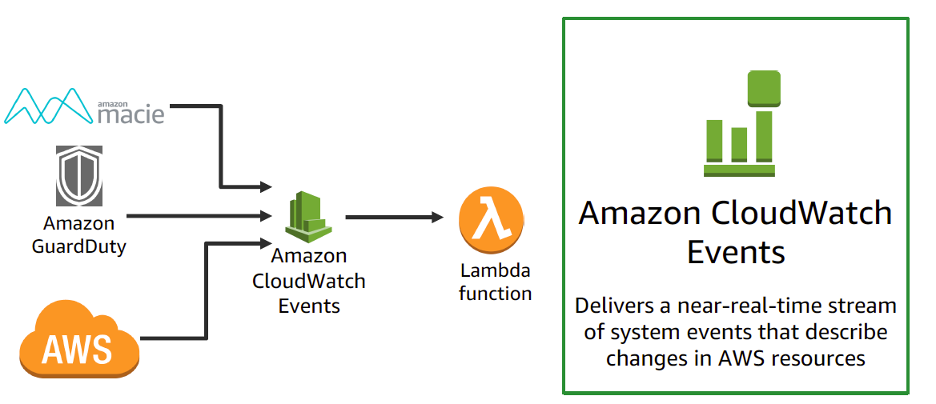
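The shape of such a state machine can be sketched in Amazon States Language (ASL). This is a minimal sketch built as a Python dict so it can be checked and serialized; the JSON output is what a CloudFormation `AWS::StepFunctions::StateMachine` definition would contain. The Lambda ARNs, the Glue job name, and the `$.status` field are hypothetical placeholders. The workflow polls a readiness check, runs a Glue job via the `.sync` integration, and routes any failure to a notification step.

```python
import json

# Hypothetical placeholders; substitute your own resources.
CHECK_LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:check-source"
NOTIFY_LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:notify"
CATCH_ALL = [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}]

definition = {
    "Comment": "Sketch: wait for source data, run a Glue job, notify on failure",
    "StartAt": "CheckSourceReady",
    "States": {
        "CheckSourceReady": {
            "Type": "Task",
            "Resource": CHECK_LAMBDA_ARN,  # assumed to return {"status": ...}
            "Retry": [{"ErrorEquals": ["States.TaskFailed"], "IntervalSeconds": 5,
                       "MaxAttempts": 3, "BackoffRate": 2.0}],
            "Catch": CATCH_ALL,
            "Next": "IsReady",
        },
        "IsReady": {
            "Type": "Choice",
            "Choices": [{"Variable": "$.status", "StringEquals": "READY",
                         "Next": "RunGlueJob"}],
            "Default": "WaitBeforeRecheck",
        },
        # In practice you would cap the number of rechecks.
        "WaitBeforeRecheck": {"Type": "Wait", "Seconds": 60, "Next": "CheckSourceReady"},
        "RunGlueJob": {
            "Type": "Task",
            # ".sync" makes Step Functions wait for the Glue job to finish.
            "Resource": "arn:aws:states:::glue:startJobRun.sync",
            "Parameters": {"JobName": "example-glue-job"},
            "Catch": CATCH_ALL,
            "Next": "Done",
        },
        "NotifyFailure": {"Type": "Task", "Resource": NOTIFY_LAMBDA_ARN, "Next": "Failed"},
        "Failed": {"Type": "Fail", "Error": "PipelineFailed", "Cause": "See execution history"},
        "Done": {"Type": "Succeed"},
    },
}


def check_transitions(defn):
    """Return transition targets that do not name an existing state."""
    states = defn["States"]
    targets = [defn["StartAt"]]
    for state in states.values():
        targets += [t for t in (state.get("Next"), state.get("Default")) if t]
        targets += [c["Next"] for c in state.get("Choices", [])]
        targets += [c["Next"] for c in state.get("Catch", [])]
    return sorted({t for t in targets if t not in states})


if __name__ == "__main__":
    assert check_transitions(definition) == []
    print(json.dumps(definition, indent=2))
```

Generating the definition in code makes it easy to catch a dangling transition before deployment, which otherwise surfaces only when CloudFormation or Step Functions rejects the definition.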

Custom Cloud Security and Monitoring

Many tools are available for application monitoring, such as New Relic and AppDynamics, and for cloud infrastructure monitoring, such as Datadog. However, depending on the application or cloud infrastructure, there are often many custom requirements.

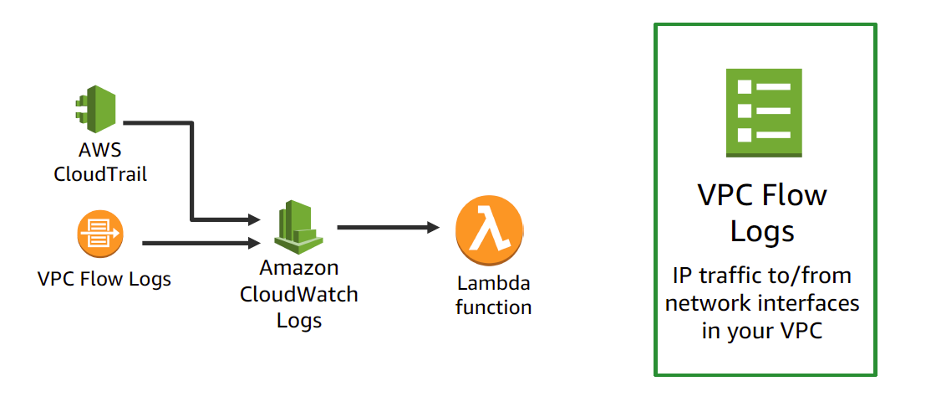

Let’s say you need to internally check a certain CloudWatch metric or probe a certain URL. Lambda functions can be integrated with CloudWatch, GuardDuty, Macie, and CloudTrail to actively monitor metrics and security, without our having to manage the underlying infrastructure for monitoring tools. Operations or DevOps teams can then focus on developing tools that increase infrastructure efficiency and security. For example, Lambda functions can integrate with CloudTrail to proactively monitor certain API calls and revert infrastructure changes or notify stakeholders. You can also integrate Lambda functions and Amazon Simple Notification Service (SNS) with third-party tools. The Paradigm team used a third-party tool to perform routine intrusive scanning; with an integrated Lambda function, we can automatically correct any issues found or notify team members.
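As an example of monitoring API calls through CloudTrail, the sketch below inspects an `AuthorizeSecurityGroupIngress` call delivered by EventBridge (detail-type `AWS API Call via CloudTrail`) and decides whether to revert and notify. The `requestParameters` layout is assumed from typical CloudTrail EC2 records and should be verified against your own events; the risky-port list is an illustrative choice, and the actual revert (e.g., an EC2 `revoke_security_group_ingress` call) and SNS notification are left out.

```python
# Illustrative policy: these ports should never be open to the internet.
RISKY_PORTS = {22, 3389}  # SSH, RDP
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def _exposes_risky_port(perm):
    # ipProtocol "-1" means all protocols and therefore all ports.
    if perm.get("ipProtocol") == "-1":
        return True
    low, high = perm.get("fromPort"), perm.get("toPort")
    if low is None or high is None:
        return False
    return any(low <= port <= high for port in RISKY_PORTS)


def _open_cidrs(perm):
    v4 = [r.get("cidrIp") for r in perm.get("ipRanges", {}).get("items", [])]
    v6 = [r.get("cidrIpv6") for r in perm.get("ipv6Ranges", {}).get("items", [])]
    return [c for c in v4 + v6 if c in OPEN_CIDRS]


def handler(event, context):
    detail = event.get("detail", {})
    if (event.get("detail-type") != "AWS API Call via CloudTrail"
            or detail.get("eventName") != "AuthorizeSecurityGroupIngress"):
        return {"action": "ignore"}
    params = detail.get("requestParameters", {})
    findings = [
        {"fromPort": p.get("fromPort"), "toPort": p.get("toPort"), "cidr": cidr}
        for p in params.get("ipPermissions", {}).get("items", [])
        if _exposes_risky_port(p)
        for cidr in _open_cidrs(p)
    ]
    if not findings:
        return {"action": "none"}
    # A real function would revoke the rule and publish to SNS here;
    # this sketch only returns the decision.
    return {"action": "revert_and_notify", "groupId": params.get("groupId"),
            "findings": findings}
```

Separating the decision from the side effects keeps the remediation logic easy to test and lets you start in a notify-only mode before enabling automatic reverts.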

When Not to Use Serverless Solutions

Latency

Critical web services require a quick response to each API request. The overhead of starting a Lambda invocation, commonly called a cold start, consists of two components. The first is the time taken to set up the execution environment for your function’s code, which is entirely controlled by AWS. The second is the code initialization duration, which is managed by the developer; in AWS’ analysis of production usage, this second component accounts for by far the largest share of overall cold start latency. A fairly simple workaround that many serverless developers use is keeping functions warm by invoking them at regular intervals.

This is mostly effective for smaller functions. Things get more complicated with larger functions or relatively complicated workflows.

To minimize cold start times, keep in mind:

- Application Architecture – Keep serverless functions small and focused; cold start times tend to grow with deployment package size and the amount of initialization work

- Provisioned Concurrency – Keeps a configured number of execution environments initialized ahead of time, addressing both causes of cold start latency

- Choice of Language – Python and Go typically have considerably lower cold start times, whereas C# and Java are notorious for the highest cold start times

- Virtual Private Clouds – Cold start times can increase due to the extra overhead of provisioning networking resources
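The initialization and warm-up points above can be combined in one handler. This is a minimal sketch, assuming a Python function where expensive setup runs at module scope: that code executes once per execution environment (during the cold start's init phase, which Provisioned Concurrency runs ahead of time) and is reused by warm invocations. The `{"warmup": true}` payload is a hypothetical convention for scheduled keep-warm pings, and `_load_resources` stands in for real setup such as creating SDK clients or loading configuration.

```python
INIT_CALLS = 0  # counts how often initialization runs (for illustration)


def _load_resources():
    """Hypothetical expensive setup: SDK clients, config, connection pools."""
    global INIT_CALLS
    INIT_CALLS += 1
    return {"greeting": "hello"}


# Module scope: runs once per execution environment, not once per invocation.
RESOURCES = _load_resources()


def handler(event, context):
    # Keep-warm pings return immediately; initialization has already happened.
    if event.get("warmup"):
        return {"warmed": True}
    # Normal requests reuse the already-initialized resources.
    return {"message": f"{RESOURCES['greeting']}, {event.get('name', 'world')}"}
```

Short-circuiting warm-up pings keeps them cheap, and moving heavy work to module scope means only the first invocation in each environment pays for it.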

Security

AWS’ Shared Responsibility Model states that AWS is responsible for security of the cloud, while customers are responsible for security in the cloud. This means it is your responsibility to set up IAM roles, configure access permissions, and deploy secure code.

Paradigm focuses on the following areas when implementing serverless architecture:

- Lambda function policies and Execution Role policies

- Lambda event source policy and configuration

- Policies and configuration of the AWS resources that interact with the Lambda function
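A simple guardrail for the policy items above is to flag overly broad permissions before deployment. This is a minimal sketch of our own, assuming standard IAM policy JSON where `Action` and `Resource` may be a string or a list and `Statement` may be an object or a list; it only checks `Allow` statements and does not handle `NotAction`, `NotResource`, or conditions. AWS IAM Access Analyzer policy validation is a more complete option.

```python
def find_wildcards(policy):
    """Return warnings for Allow statements that use '*' wildcards."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    warnings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Normalize single strings to lists.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        for action in actions:
            if action == "*" or action.endswith(":*"):
                warnings.append(f"statement {i}: broad action {action!r}")
        if "*" in resources:
            warnings.append(f"statement {i}: resource '*'")
    return warnings
```

For a Lambda execution role that only reads an ACL table, a statement granting `dynamodb:*` on `*` would produce two warnings, while one granting `dynamodb:GetItem` on the table ARN would produce none.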

Handle Timeout Limits

One of the most important configurations for an AWS Lambda function is the timeout value. The timeout dictates how long a function invocation can run before the Lambda service forcibly terminates it; the current maximum is 15 minutes. There are workarounds for handling long-running jobs, but Lambda is not designed for them. When we design an application, we build with Lambda’s running limits in mind, creating mini-batches or decoupling the logic so that each Lambda invocation runs for seconds to a few minutes at most.
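One way to respect the timeout is to checkpoint: process items until the remaining time drops below a margin, then return a cursor so the next invocation resumes where this one stopped. This is a minimal sketch using the Lambda context method `get_remaining_time_in_millis()`; the 10-second margin, the `items`/`cursor`/`results` event fields, and `process_item` are hypothetical. `FakeContext` is a local stand-in for testing outside Lambda.

```python
SAFETY_MARGIN_MS = 10_000  # assumption: time reserved to return the checkpoint


def process_item(item):
    # Hypothetical unit of work; replace with real processing.
    return item * 2


def handler(event, context):
    items = event["items"]
    cursor = event.get("cursor", 0)
    results = list(event.get("results", []))  # copy so the input is not mutated
    while cursor < len(items):
        # The Lambda context reports the time left before the timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # stop early and hand back a checkpoint instead of timing out
        results.append(process_item(items[cursor]))
        cursor += 1
    return {"items": items, "cursor": cursor, "results": results,
            "done": cursor >= len(items)}


class FakeContext:
    """Local stand-in for the Lambda context: each check consumes cost_ms."""

    def __init__(self, budget_ms, cost_ms):
        self.remaining_ms = budget_ms
        self.cost_ms = cost_ms

    def get_remaining_time_in_millis(self):
        remaining = self.remaining_ms
        self.remaining_ms -= self.cost_ms
        return remaining
```

Because the output has the same shape as the input, a Step Functions Choice state on `$.done` could loop back to the same task until the work completes, keeping every invocation well under the limit.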

Vendor Lock-in

Allowing a vendor to provide all backend services for an application inevitably increases reliance on that vendor. Setting up a serverless architecture with one vendor can make it difficult to switch if necessary. However, when considering vendor lock-in cost, we also have to consider development cost savings. In most cases, we have to balance development cost against switching cost.

Conclusion

AWS Lambda functions and their implementation of serverless architecture patterns provide an exciting set of tools and capabilities to create products and services that are responsive, resilient, elastic, and message-driven while improving time to market, speed, and agility. They allow you to achieve this while creating a strong developer experience in a polyglot environment. Additionally, you can simplify and reduce operations.

Contact us to talk live with one of our AWS Serverless Solutions Architects and visit https://pt-corp.com/solutions/digital-product-engineering/ to learn more about our Digital Product Engineering capabilities.